The Generative AI Disclosure Dilemma

I recently spoke about our AI strategy at an internal Balfour Beatty Investments conference, and someone asked me: “Should you declare if you’re using AI to generate content?” It also came up when I rambled at Multiverse students during an internal panel discussion on AI’s potential impact on the employment market, and again this week on an internal call with some Balfour Beatty employees.

My answer varied each time because, honestly, I don’t know. I also don’t think a disclosure requirement would be enforceable in any way. However, it’s currently often pretty obvious when AI has been used in its raw form, particularly in list articles and opinion pieces… we’re onto you, ‘5 things you should consider…’ writers 😉 I see the same on my LinkedIn feed, in Medium articles and Reddit posts, and it keeps crossing my mind – maybe there are contexts where disclosure is necessary, and more to the point, ethical?

I recently watched some videos where new business owners described using ChatGPT to write whole books on particular subjects, specifically targeting particular markets and demographics, sometimes putting the name of a human author on a book entirely written by an AI tool. To me, that feels disingenuous at best.

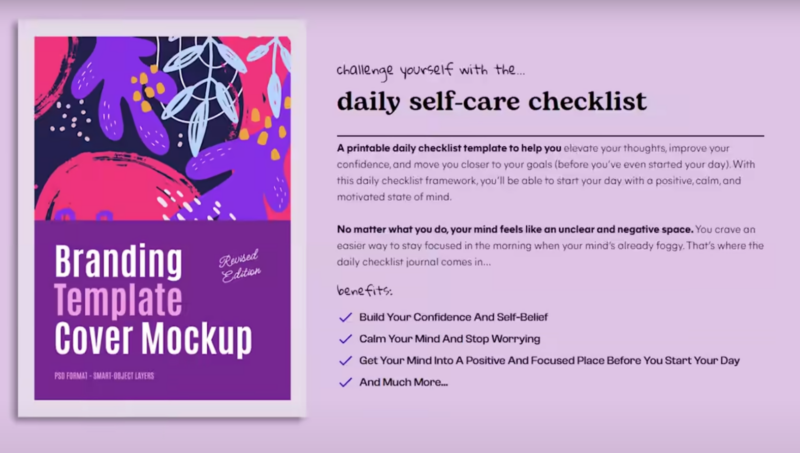

This video was genuinely a bit troubling. It tells the story of a young guy who claims to have made more than $1m selling entirely AI-generated books. Several of the books are targeted at the white-middle-class-suburban-mum self-help market, presenting self-help advice on improving self-confidence and calming minds.

There’s a lot of breathless excitement online about these sorts of startups, but I struggle to find any inspiration in a business model rooted in churning out generative AI content and wrapped in sophisticated online marketing techniques. The naked focus on profit and marketing, rather than quality content, is pretty disconcerting.

AI and Creative Boundaries

I also know a ton of people who work in the creative industries, and this keeps coming up. Should a photographer declare if they’ve edited a photo using Stable Diffusion and Inpainting or used Adobe Firefly to create fake backgrounds or generate whole images?

A friend suggested that this is an extension of the never-ending debates around Photoshop use and disclosure in the photography world, and he probably has a point. However, Photoshop is complex to learn, whereas the barrier to entry is much lower today. The new breed of free (or very cheap) tools requires little to no skill to use. In some contexts, I think that’s great, particularly when it enables non-technical users to use historically complex tools in areas like data analysis, but I’m not so sure the same applies when generating creative or expert advice content and then passing it off as being created by a human.

There’s also the ongoing issue of if/how we should apply 300-year-old copyright laws to training AI models. One of my musician friends calls them ‘plagiarism machines,’ and it’s difficult (albeit not impossible) to credibly argue against that point of view. Then again, these tools don’t really ‘copy’ anything, and I’m not sure it’s any different to someone staring at the Mona Lisa for hours and then recreating it at home.

Outright copying an artwork and then passing it off as the original is fraud, but I’m unsure if the same applies to an AI model. The context is very different, plus there’s no passing off as Da Vinci… he clearly didn’t use MidJourney. These tools don’t ‘store’ a copy of their training data in their models, so if there’s no copying, does copyright apply? I currently err towards the view that it doesn’t, albeit I reserve the right to change my mind, potentially tomorrow. 🤷‍♂️ 😉

Originality in a generative AI world

It’s important to say that I’m a huge advocate of using generative AI tools. I use them daily, mostly to help with work productivity and, more recently, to generate images for these blogs. I’ve fine-tuned my own, and I have a custom AI running at home called METIS (formerly Falcon), which I use as a place to experiment and sanity check ideas before taking them any further.

However, it increasingly feels like there’s a boundary – if content is supposed to reflect personal viewpoints, or represent expert advice, or express artistic creation in some way… but you haven’t created it yourself… is it okay to claim you’re the author? That doesn’t sit right with me.

At the moment, my writing is a ‘no-AI’ zone. I’ve never written articles before or made a concerted effort to engage with platforms like LinkedIn, and I’m enjoying the experience of wittering on at people using my keyboard too much. The last thing I want to do is hand it over to a generative AI model.

Each person has their own approach, I guess, but I can’t help but wonder why ‘writers’ wouldn’t choose to do their own writing. It’s a bit like forming a garage band with your mates and then outsourcing the songwriting (and instrument-playing) to someone else. It feels wrong and surely misses the point (and the fun) of the act of creation.

The potential consequences for the future of creative industries trouble me a bit (sometimes an awful lot), and I’m curious about what other people think. What are your thoughts on balancing AI assistance with human creative content generation?

I’m conscious that I’m not offering any answers here, and this article is full of question marks, so I’ll follow up with a part 2 when I’ve thought about it more and have more concrete views. I suspect the public wants to see transparency around corporate AI use, but does the same apply to individuals in the creative industries? It probably does up to a point (per the Photoshop debate), although I increasingly think the context matters.

We’re right at the beginning of a whole new approach to content creation, and I think it challenges the ‘normal’ and traditional ideas of authenticity and originality. The questions I keep being asked about disclosure might not just be about transparency but also about the ethical use of AI technology, and how humans present and express their creativity.

Are you really being creative if you’re just steering an algorithm?